Azure B-Series v2: The Architect's Guide to Cost Optimization

Source: Microsoft (techcommunity.microsoft.com)

Introduction

In 2025, one number frames the economics of enterprise technology: worldwide public cloud spending is forecast to reach $723.4 billion, a 21.5% increase over the previous year.

However, much of this capital is deployed inefficiently. Industry data indicates that nearly 32% of cloud budgets are wasted, largely on "zombie" infrastructure and on servers provisioned for peak capacity but utilized at a fraction of it.

For the cloud architect, the challenge is no longer just uptime; it is economic efficiency. This article examines Microsoft Azure’s Burstable (B-family) v2 Virtual Machines and how they address the "overprovisioning trap" for development and general-purpose workloads.

The Situation: The Economics of Waste

In a traditional on-premises data center, hardware was a sunk cost. Overprovisioning was a rational safety net. In the cloud, where billing is per-second, overprovisioning is a continuous hemorrhage of capital.

Consider a typical development server or a proof-of-concept environment. It is active during business hours and often sits idle at night. Even during active hours, CPU utilization rarely stays at 100%; it spikes during compilation or testing and then drops. Real-world telemetry often shows these servers averaging between 5% and 15% CPU utilization.

Yet many of these workloads run on general-purpose D-series VMs, which bill for the full vCPU capacity for every second the VM is running. This disconnect between utility (what is used) and cost (what is paid) is the fundamental inefficiency that the Azure B-series is designed to solve.

The Action: Architecture of the B-Series v2

The Azure B-series (Burstable) fundamentally alters the compute contract. Instead of purchasing a fixed slice of a CPU, you purchase a baseline performance guarantee along with the right to burst to 100% using accumulated credits.

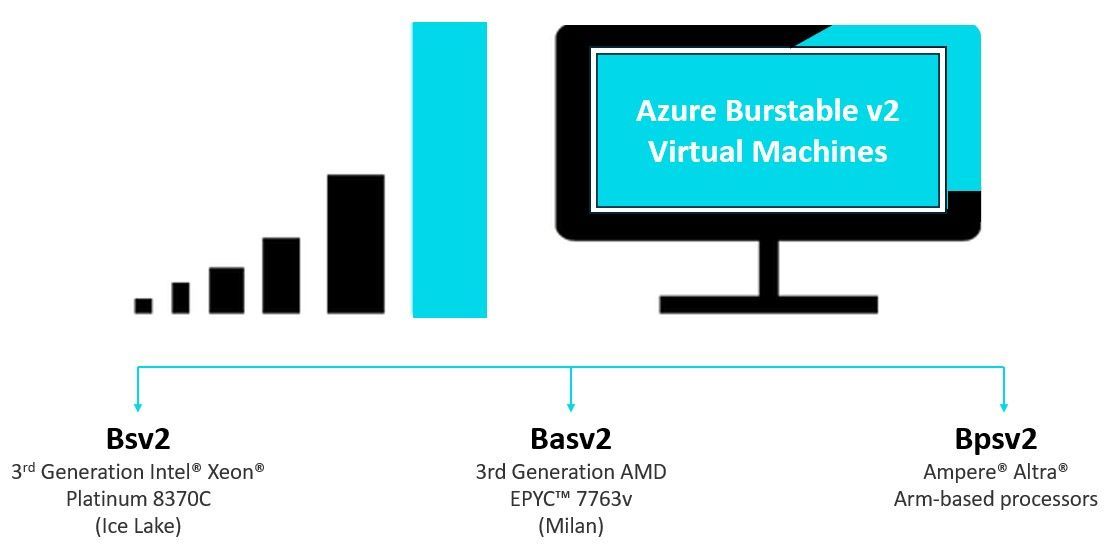

The v2 generation (Basv2, Bpsv2, Bsv2) is a substantial upgrade over the original (v1) B-series, most notably by addressing v1's network and remote-storage I/O bottlenecks.

1. The Credit Bank Model

Success with the B-series requires understanding the "Credit Bank." It works like a time-shifted account: CPU time left unused during quiet periods is banked and spent later during bursts. Three variables govern it:

- Base CPU Performance: The threshold below which credits are earned.

- Credits Banked: One credit equals one minute of a single vCPU running at 100% utilization.

- Credit Limit: The maximum "tank size" for the VM.

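The Math: When a VM runs below its baseline, it accrues credits every hour according to:

$$ \text{Credits accrued per hour} = \frac{(\text{Base \%} - \text{Actual \%}) \times (\text{Number of vCPUs}) \times 60}{100} $$

When the VM runs above its baseline, the same expression turns negative and gives the credits consumed per hour.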

For example, a Standard_B4as_v2 (4 vCPUs) running at 10% load steadily builds up a reservoir of CPU time. The VM draws on this reservoir automatically during traffic spikes or build processes.
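To make the accrual arithmetic concrete, here is a minimal simulation of the credit bank. It assumes the per-hour formula above, a 40% baseline and a 2,304-credit cap for the 4-vCPU example; those two numbers are illustrative assumptions, so check the Azure size table for the real values of your size. The function names are hypothetical.

```python
# Minimal sketch of the B-series credit bank (not an Azure API).
# Assumptions: credits/hour = (base% - actual%) * vCPUs * 60 / 100,
# the bank is capped at max_banked, and it never drops below zero.

def credits_per_hour(base_pct: float, actual_pct: float, vcpus: int) -> float:
    """Credits earned (positive) or spent (negative) in one hour at a steady load."""
    return (base_pct - actual_pct) * vcpus * 60 / 100


def simulate_bank(hourly_load_pct, base_pct, vcpus, max_banked, start=0.0):
    """Return the credit balance at the end of each hour of a load profile."""
    balance = start
    history = []
    for load in hourly_load_pct:
        balance += credits_per_hour(base_pct, load, vcpus)
        # At zero the VM is throttled to baseline, so the balance cannot go
        # negative; above the cap, extra credits are simply not banked.
        balance = min(max(balance, 0.0), max_banked)
        history.append(balance)
    return history


# 4 vCPUs, assumed 40% baseline, steady 10% load.
print(credits_per_hour(40, 10, 4))  # 72.0

# One working day: 16 quiet hours at 10%, then 8 busy hours at 70%.
day = [10] * 16 + [70] * 8
print(simulate_bank(day, base_pct=40, vcpus=4, max_banked=2304)[-1])  # 576.0
```

The quiet hours bank 1,152 credits and the busy hours spend 576 of them, so this profile ends the day with credits to spare; a profile that ends at 0.0 is a sign the workload does not fit the burst model.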

2. The v2 Processor Landscape

The v2 series introduces architectural choice, allowing optimization based on instruction set and cost. The cost difference is significant when compared to the standard D-series equivalents.

- Basv2 (AMD EPYC™ Milan): The best-value option for x86 workloads, with strong performance for standard applications and databases.

- Bpsv2 (Ampere® Altra® ARM): The lowest cost option. Ideal for containerized microservices and open-source stacks (Linux/Java/Node/Go), but requires ARM compatibility.

- Bsv2 (Intel® Xeon® Ice Lake): Best for legacy applications requiring specific Intel instruction sets or nested virtualization.
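The selection guidance above boils down to two questions about the workload. The helper below is a hypothetical sketch that encodes only this article's rules of thumb; it is not an Azure API, and real sizing should also weigh memory, vCPU count and regional availability.

```python
# Hypothetical helper encoding the family-selection guidance above.
# The family names are real Azure B-series v2 families; the decision rules
# are a simplification for illustration, not official Azure policy.

def pick_b_series_v2_family(arm_compatible: bool, needs_intel_features: bool) -> str:
    """Suggest a B-series v2 family from two coarse workload traits."""
    if needs_intel_features:
        # Intel-specific instruction sets or features: Intel Xeon (Ice Lake).
        return "Bsv2"
    if arm_compatible:
        # Lowest-cost option, but every binary and container image must run on ARM64.
        return "Bpsv2"
    # Default x86 choice: AMD EPYC (Milan).
    return "Basv2"


print(pick_b_series_v2_family(arm_compatible=True, needs_intel_features=False))   # Bpsv2
print(pick_b_series_v2_family(arm_compatible=False, needs_intel_features=False))  # Basv2
```

The Intel check runs first on purpose: a hard dependency on Intel-specific features outweighs the cost advantage of ARM.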

The Implementation Challenge: The "Missing Temp Disk"

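Migrating to B-series v2 is not a simple "right-click, resize" operation because of a critical architectural change: B-series v2 sizes do not include a local ephemeral (temp) disk. Azure does not support resizing a VM from a size with a local temp disk to one without, so the VM typically has to be recreated from its existing OS disk or a snapshot.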

Many D-series sizes (for example Dv3, and the "d" variants such as Ddsv5) include a "free" local temp disk (usually D: on Windows or /mnt/resource on Linux). Applications often rely on it for swap files, tempdb, or build artifacts. The B-series v2 is "diskless," relying entirely on remote Managed Disks.
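Before migrating, it helps to find everything that silently depends on the temp disk. The sketch below is a hypothetical pre-migration check that scans configuration text for the default temp-disk paths named above, plus the Azure Linux Agent setting `ResourceDisk.EnableSwap` in `waagent.conf`, which places swap on the resource disk. The pattern list is an assumption and deliberately minimal; extend it for your own images and applications.

```python
import re

# Hypothetical pre-migration check: flag lines that appear to depend on the
# local temp disk, which B-series v2 sizes do not have.
TEMP_DISK_PATTERNS = [
    re.compile(r"/mnt/resource\b"),                   # Linux resource disk mount
    re.compile(r"\b[Dd]:\\"),                         # Windows D: temp drive
    re.compile(r"ResourceDisk\.EnableSwap\s*=\s*y"),  # waagent.conf swap on temp disk
]


def find_temp_disk_references(text: str) -> list[tuple[int, str]]:
    """Return (line number, line) pairs that appear to use the temp disk."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        if any(pattern.search(line) for pattern in TEMP_DISK_PATTERNS):
            hits.append((lineno, line.strip()))
    return hits


# Illustrative configuration snippet (made up for this example).
sample = """ResourceDisk.EnableSwap=y
swapfile=/mnt/resource/swapfile
tempdb_path=D:\\SQLTemp
data_dir=/var/lib/app
"""

for lineno, line in find_temp_disk_references(sample):
    print(lineno, line)
```

A text scan like this only catches hard-coded paths; settings computed at runtime still need to be checked by testing the workload on a diskless size.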

The Solution:

- Swap Files: You must reconfigure the OS to place the page/swap file on the OS disk or a dedicated attached data disk.

- Performance: To offset the loss of the low-latency local SSD, consider upgrading the OS disk to Premium SSD. Because Basv2 supports high remote storage throughput, this configuration is often enough to keep development workloads close to their D-series I/O performance while still offering significant compute savings. Remote storage will not match local SSD latency, so validate latency-sensitive components such as tempdb before migrating.
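For a Linux VM whose swap previously lived on the temp disk, the fix is a swap file on the OS disk or a data disk. The sketch below only builds the standard commands (it does not run them); the default path `/swapfile` and the 4 GiB size are illustrative assumptions, and the commands must be run as root.

```python
import shlex

# Sketch: build (but do not execute) the commands that create a persistent
# swap file on a managed disk. Path and size are illustrative assumptions.
# On filesystems where fallocate-backed swap files are unsupported, create
# the file with dd instead.

def swapfile_commands(path: str = "/swapfile", size_gib: int = 4) -> list[str]:
    p = shlex.quote(path)
    return [
        f"fallocate -l {size_gib}G {p}",  # reserve the space
        f"chmod 600 {p}",                 # swap must not be world-readable
        f"mkswap {p}",                    # format the file as swap
        f"swapon {p}",                    # enable it now
        # Persist the swap file across reboots.
        f"echo {shlex.quote(path + ' none swap sw 0 0')} >> /etc/fstab",
    ]


for cmd in swapfile_commands():
    print(cmd)
```

On images managed by the Azure Linux Agent, also set `ResourceDisk.EnableSwap=n` in `/etc/waagent.conf` so the agent stops trying to place swap on a resource disk that no longer exists.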

The Result: Strategic Optimization

Moving workloads from fixed-performance VMs (D-series) to burstable VMs (Basv2/Bpsv2) typically results in a cost reduction of 40-50%, provided the workload fits the burst profile.
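Whether a given migration lands in that range depends on your actual rates and on any extra storage cost, such as the Premium SSD upgrade discussed above. The sketch below is a back-of-the-envelope check; every price in it is a made-up placeholder, and the 730-hour month is the usual cloud pricing convention.

```python
# Back-of-the-envelope savings check. All prices are hypothetical
# placeholders; substitute current pay-as-you-go rates for your region.
HOURS_PER_MONTH = 730  # common monthly-hours convention in cloud pricing


def monthly_savings_pct(d_hourly: float, b_hourly: float,
                        extra_disk_monthly: float = 0.0) -> float:
    """Percent saved moving from a D-series VM to a B-series v2 VM,
    including any extra monthly cost such as a Premium SSD OS disk upgrade."""
    d_cost = d_hourly * HOURS_PER_MONTH
    b_cost = b_hourly * HOURS_PER_MONTH + extra_disk_monthly
    return (d_cost - b_cost) / d_cost * 100


# Placeholder rates: D-series $0.20/h, B-series $0.10/h, $10/month disk upgrade.
print(round(monthly_savings_pct(0.20, 0.10, extra_disk_monthly=10.0), 1))
```

With these placeholder rates the result is about 43%: the compute price is halved, but the disk upgrade claws back part of the saving, which is why it belongs in the comparison.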

However, "Set and Forget" is dangerous. The B-series introduces the risk of Credit Exhaustion. If a workload runs above baseline for too long, credits reach zero, and the VM is throttled to its baseline (e.g., 20%).
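How quickly exhaustion arrives follows directly from the per-hour form of the credit formula (one credit = one vCPU at 100% for one minute). The sketch below estimates the hours until the bank is empty under a sustained load; the balance, baseline and vCPU count in the example are illustrative assumptions.

```python
import math

# Sketch: hours until the credit bank is empty under a sustained load.
# burn/hour = (load% - base%) * vCPUs * 60 / 100; values below are illustrative.

def hours_until_exhausted(balance: float, base_pct: float,
                          load_pct: float, vcpus: int) -> float:
    burn_per_hour = (load_pct - base_pct) * vcpus * 60 / 100
    if burn_per_hour <= 0:
        return math.inf  # at or below baseline the bank never drains
    return balance / burn_per_hour


# 576 banked credits, assumed 40% baseline, 4 vCPUs pinned at 100%.
print(hours_until_exhausted(576, base_pct=40, load_pct=100, vcpus=4))  # 4.0
```

In this example a full day of saved-up credits lasts only four hours at full load, after which the VM drops to its baseline.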

Best Practice:

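Do not deploy B-series without monitoring. Configure an Azure Monitor alert that fires when the CPU Credits Remaining metric drops below roughly 20% of the VM's maximum banked credits. The metric reports an absolute credit count, so convert the percentage into a number for the specific VM size. This gives you time to resize the VM or track down runaway processes before performance degrades.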
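The percentage-to-count conversion, and one way to create the alert, can be sketched as follows. The helper only builds an Azure CLI `az monitor metrics alert create` command string; the resource group, VM resource ID, alert name and 2,304-credit cap are hypothetical placeholders, and the exact `--condition` syntax should be confirmed with `az monitor metrics alert create --help` before use. An `--action` pointing at an action group is also needed for anyone to be notified.

```python
import shlex

# Sketch: convert "20% of the bank" into the absolute count reported by the
# "CPU Credits Remaining" metric, then build (not run) an Azure CLI command.
# max_banked must come from the Azure size table for your VM size.

def credit_alert_threshold(max_banked: int, pct: float = 20.0) -> int:
    return round(max_banked * pct / 100)


def alert_command(vm_id: str, resource_group: str, threshold: int) -> str:
    args = [
        "az", "monitor", "metrics", "alert", "create",
        "--name", "b-series-low-credits",  # hypothetical alert name
        "--resource-group", resource_group,
        "--scopes", vm_id,
        "--condition", f"avg CPU Credits Remaining < {threshold}",
        "--window-size", "5m",
        "--evaluation-frequency", "5m",
    ]
    return shlex.join(args)  # shell-safe quoting (Python 3.8+)


threshold = credit_alert_threshold(2304)  # illustrative cap
vm_id = ("/subscriptions/<sub-id>/resourceGroups/my-rg/providers/"
         "Microsoft.Compute/virtualMachines/my-vm")
print(threshold)
print(alert_command(vm_id, "my-rg", threshold))
```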

By aligning infrastructure costs with how the workload actually behaves, paying for the average rather than the peak, organizations can reclaim significant budget in 2025 while keeping the ability to absorb short spikes.
