The AWS Outage That Broke the Internet: What Cloud Engineers Need to Know

Yesterday's 15-hour AWS outage wasn't just another technical glitch—it was a wake-up call for every organization betting their future on cloud infrastructure.

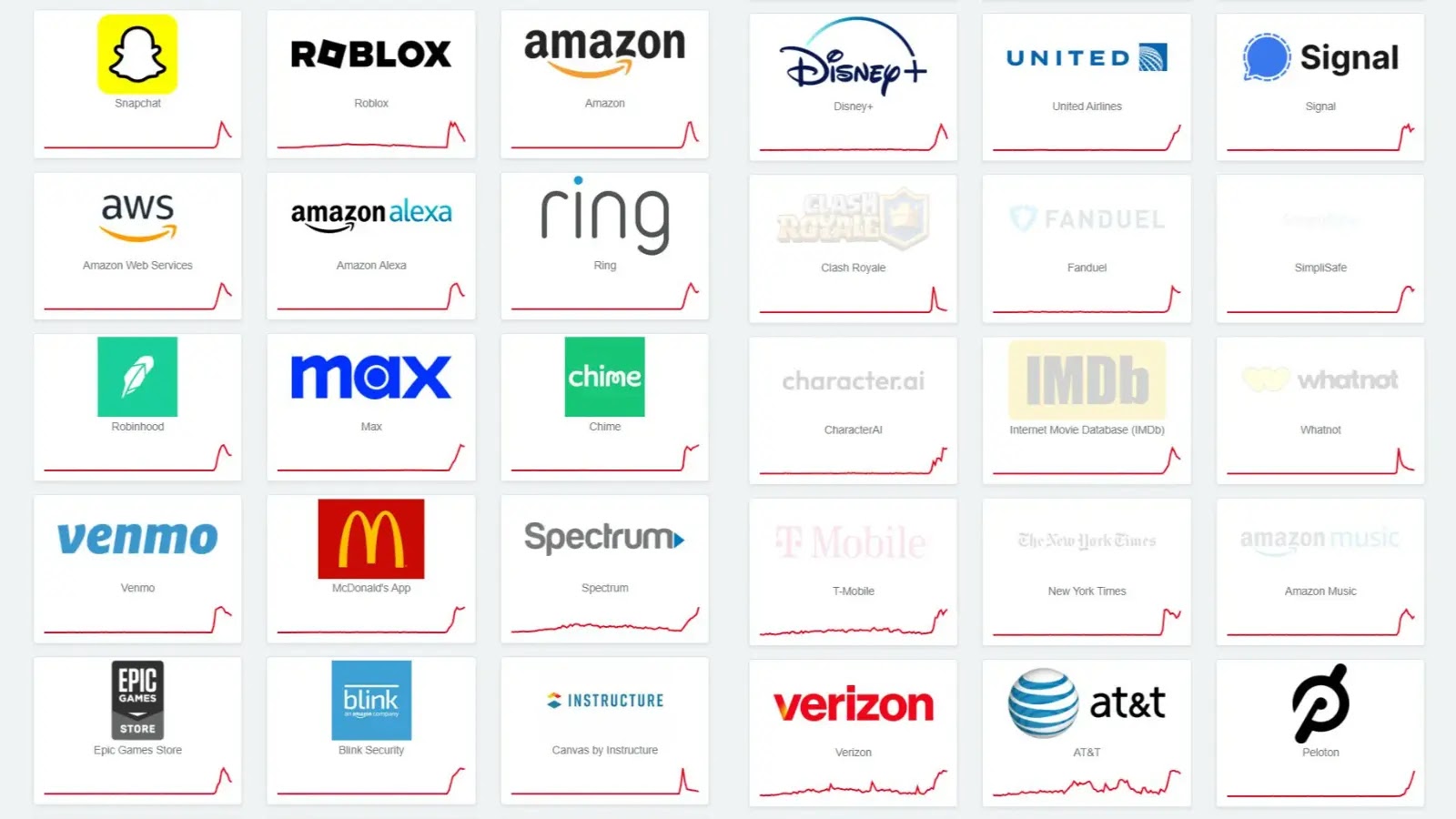

As a Cloud Solutions Architect who's spent years designing resilient systems across AWS, Azure and GCP, I watched this incident unfold with a mix of professional concern and personal reflection. The cascading failure that took down Snapchat, Reddit, Venmo, and over 1,000 other companies exposed vulnerabilities that many of us have been warning about for years.

What Actually Happened?

At 12:11 AM PDT on October 20, 2025, a DNS resolution failure in DynamoDB's API endpoints triggered what would become one of the longest AWS outages in history. Think of DNS as the internet's phone book—when it fails, servers can't find each other, authentication systems become unreachable, and microservices lose the ability to communicate.

The technical details matter here: This wasn't just a DynamoDB problem. The initial DNS issue cascaded through AWS's tightly coupled architecture, impacting an internal EC2 subsystem, then Network Load Balancers, then Lambda, CloudWatch, and SQS. Each failure amplified the next, creating a perfect storm that paralyzed 64+ AWS services.

The financial impact? Over $75 million per hour in direct losses. Amazon alone lost $72.8 million hourly. But the real cost—measured in lost productivity, delayed projects, and shaken customer confidence—runs into the hundreds of billions.

The US-EAST-1 Problem We Keep Ignoring

Here's what bothers me most: This is the fifth major US-EAST-1 outage since 2017.

US-EAST-1 (Northern Virginia) is AWS's oldest and largest region. It's also the default region for countless services and the home of critical global endpoints. When US-EAST-1 fails, workloads running in other regions can still experience issues due to hidden dependencies on global services like IAM and DynamoDB Global Tables.

We've known about this single point of failure for years, yet organizations continue concentrating their entire infrastructure in one region with one provider. Why? Because multi-cloud and multi-region architectures are complex and expensive.

But yesterday proved that the cost of not implementing these strategies is far higher.

Three Hard Truths Cloud Engineers Must Accept

1. Multi-Cloud Is No Longer Optional

I've heard every argument against multi-cloud: "It's too complex," "It costs too much," "We don't have the expertise."

After yesterday, those excuses won't hold up in your next board meeting.

Wall Street is already mandating multi-cloud strategies for financial institutions. Your customers are asking questions about your redundancy plans. Your competitors are diversifying their infrastructure.

Practical steps:

- Start with critical workloads—don't try to migrate everything at once

- Use containerization (Kubernetes) to maintain portability between providers

- Implement active-active architectures where workloads run simultaneously across AWS, Azure, and GCP

- Budget 15-20% additional infrastructure costs for multi-cloud redundancy (it's cheaper than a 15-hour outage)

2. Your Monitoring Is Probably Inadequate

During the December 2021 AWS outage, their own status dashboard initially showed no problems. You can't rely on your provider's monitoring alone.

What you need:

- Independent monitoring that aggregates metrics from all your cloud providers

- Real-time alerting that integrates with incident response platforms

- AI-driven anomaly detection to catch issues before they cascade

- Continuous monitoring of provider status pages with automated alerts

3. Fault Tolerance Isn't a Feature—It's a Requirement

Every architecture decision should ask: "What happens when this fails?"

Because it will fail. Not if, but when.

Design principles that matter:

- Deploy across multiple regions with automatic failover

- Build stateless applications that can scale and recover quickly

- Implement circuit breakers to prevent cascading failures

- Use message queues to decouple services

- Test your disaster recovery plans regularly (chaos engineering isn't optional anymore)

The Regulatory Storm That's Coming

Financial regulators worldwide are watching incidents like this closely. The EU's Digital Operational Resilience Act (DORA) already mandates that financial institutions assess concentration risk from cloud providers.

Expect similar requirements to expand to other sectors. Critical infrastructure, healthcare, and government services will face enhanced resilience standards.

Organizations that wait for regulation to force their hand will be playing catch-up while competitors who prepared early gain strategic advantages.

What I'm Telling My Clients

As someone who helps organizations architect cloud solutions, my recommendations have shifted from "nice to have" to "business critical":

Immediate actions (next 30 days):

- Conduct a dependency audit—map every service dependency and identify single points of failure

- Review your disaster recovery plan—when was the last time you actually tested it?

- Implement independent monitoring across all cloud providers

- Document your architecture with clear runbooks for common failure scenarios

Strategic investments (next 6-12 months):

- Begin multi-cloud pilot projects for critical workloads

- Train your team on multi-cloud operations and management

- Implement infrastructure-as-code for consistent deployments across providers

- Establish performance baselines and SLAs that account for provider outages

The Bottom Line

Yesterday's AWS outage revealed an uncomfortable truth: We've built our digital economy on a remarkably fragile foundation.

Three major providers—AWS, Azure, and Google Cloud—control the vast majority of cloud infrastructure. When one fails, the ripple effects are global and immediate.

This concentration risk isn't going away. But organizations that architect for failure, diversify dependencies, and maintain realistic expectations about system reliability will be positioned to maintain operations when the next outage occurs.

And there will be a next one.

The question isn't whether you can afford to implement multi-cloud strategies and comprehensive redundancy. It's whether you can afford not to.

What are your thoughts? How is your organization approaching cloud resilience? I'd love to hear what strategies you're implementing or challenges you're facing.

Zain Ahmed is a Cloud Solutions Architect specializing in multi-cloud infrastructure, DevOps engineering, and resilient system design. He helps organizations build scalable, fault-tolerant cloud architectures through his company MapleGenix.

Sources & Further Reading

Want to discuss this further?

I'm always happy to chat about cloud architecture and share experiences.